The reproducibility crisis

Question: what can be aggravating, sometimes unpleasant and often unrewarding, brings little prestige, and can consume insane amounts of time, resources and effort?

Answer: repeating a research study!

Leaders in fields ranging from biomedical research to social science have said it again and again: replication matters, and so does sharing protocols. Repeating experiments is central to the career of a scientist. Indeed, it is crucial, be it for a research publication or for a patent application. For a finding to have any significance at all, it needs to hold ‘true’ beyond the one time it was obtained by the original research group. If it does not, we have to attribute it to serendipity, sloppy dissemination or, rarely, fraud. A major result may depend on a subtlety in how the experiment was conducted that was communicated in neither a research paper nor a conference paper.

Science is facing a “reproducibility crisis” where more than two-thirds of researchers have tried and failed to reproduce another scientist’s experiments, research suggests.

The challenge of sharing protocols

My first experience of publishing research came while working at the National Research Council Labs in Ottawa, Canada. It was a very exciting moment. This was the reward for weeks of toil optimizing a process for the functionalization of polymeric surfaces using remote plasma chemical vapour deposition. It turned out that publishing a paper was an awful lot of work in itself. Thinking about which journal to submit it to meant appreciating the pecking order of journals as indicated by their ‘Impact Factor’. It also meant figuring out how to convert the laboratory notes into a well-organised paper that would ‘pass’ peer review, and sharing protocols so that the work would be easily repeatable by other researchers!

Interpreting protocols

The ‘methods and materials’ section of a research paper provides the ‘recipe’. Rather like baking a cake, however, there is a question of human interpretation and skill inherent in being able to review another researcher’s experimental protocol and repeat it step by step. This is especially true in the life sciences, where research invariably involves working with liquids. Let’s assume that you manage to extract the correct step-by-step protocol from the research paper. You can list the labware used and, at least in theory, re-use the same brand and type of labware, though many do not do this. More problematic are the steps that involve human intervention, such as the use of a manual pipette. Even if you use the same pipette, how can you tell whether it has been calibrated in the same way as the one used in the published work? Moreover, different human operators use pipettes in different ways, not surprisingly yielding slightly different results. When you are mixing different samples and reagents, this can become quite problematic. Indeed, it often leads to frustrated clinicians and drug developers who want solid foundations of pre-clinical research to build upon!

Experimental protocols are key when planning, performing and publishing research in many disciplines, especially in relation to the reporting of materials and methods.

Sharing protocols more readily, ideally by some electronic means in our age of the Internet of Things, obviates many potential sources of error.

Is repeatability really a problem?

Over the last few years, the Virginia-based Center for Open Science has run ‘The Reproducibility Project’, attempting to repeat the findings of five landmark cancer studies. You might expect this to be straightforward, given that the whole integrity of the ‘scientific method’ is contingent upon repeatability of findings. Read the research paper, repeat the protocol that has been both peer reviewed and disseminated through top journals and ‘voilà’! Except that is not what actually happened.

Concern over the reliability of the results published in scientific literature has been growing for some time. According to a survey published in the journal Nature in 2016, more than 70% of researchers have tried and failed to reproduce another scientist’s experiments. Marcus Munafo is one of them. Now professor of biological psychology at Bristol University, he almost gave up on a career in science when, as a PhD student, he failed to reproduce a textbook study on, of all things, ‘anxiety’.

Opinion holds that much of this is due to a human tendency to focus on the ‘exciting’ rather than the mundane reality of ensuring repeatable, valid science. Undoubtedly, much is also due to the way that science is disseminated: it is still a slow process, even when it comes to reviewing research that has significant potential to save lives. That sort of research has its highest impact as part of a collaboration, initially between academic institutions, then at some point between industry (pharmaceutical companies in particular) and academia. Moreover, there can be understandable commercial barriers to sharing protocols, as well as the basic human concern about competition.

Easier sharing of protocols accelerates innovation

Collaborations require experiment protocols to be shared between research groups, and repeated. You only have to spend the briefest amount of time on social media sites for researchers, such as ResearchGate, to realise that shared protocols, including those on https://www.nature.com/nprotinvite, raise as many questions as they answer when it comes to understanding how to ‘bake the cake’, though in this instance ‘mixing, or indeed shaking, the cocktail’ might be more apt.

Giving researchers a means to easily construct, execute, capture and disseminate their experiment protocols, including the semi- or fully-automated execution of each step, removes many of these variables and meets the need of those frustrated clinicians and drug developers who want solid foundations of pre-clinical research to build upon!

Our challenge is not only to make it easier for scientists to repeat both their own science and that of other research groups, but also to speed up the process of bringing innovation to market. Major funders of research, in both the public and private sectors, are trying to remove the barriers to this, be they the speed of peer review, the ease with which a protocol can be accurately and quickly shared, or the limited use of smart automation that would free scientists to focus on higher-level activities. If you wonder what those higher-level activities might be, take a look at the burgeoning databases of biological pathways and the effort and resources required to solve one of man’s greatest challenges: understanding what makes us tick. Put another way, addressing the repeatability problem isn’t ‘rocket science’. We have the tools available today, so let’s use them!

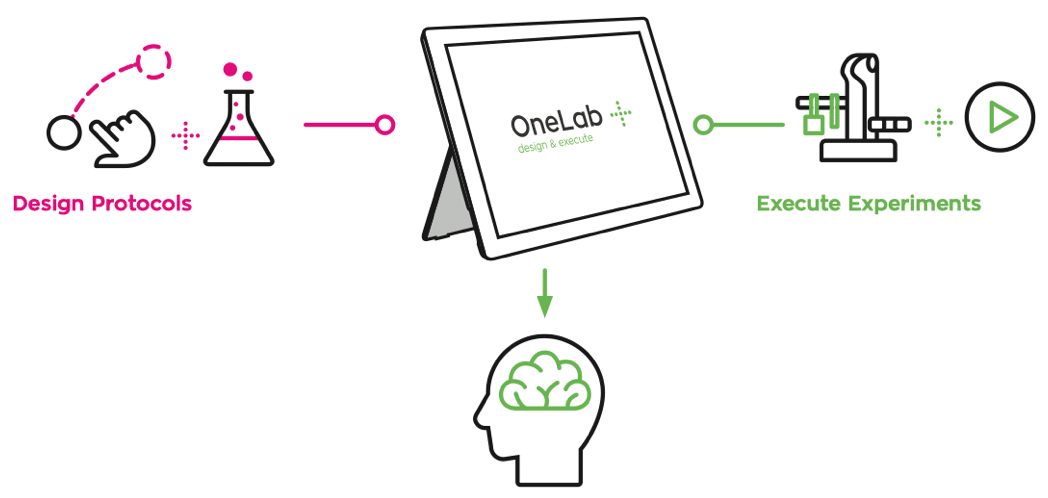

Enter OneLab

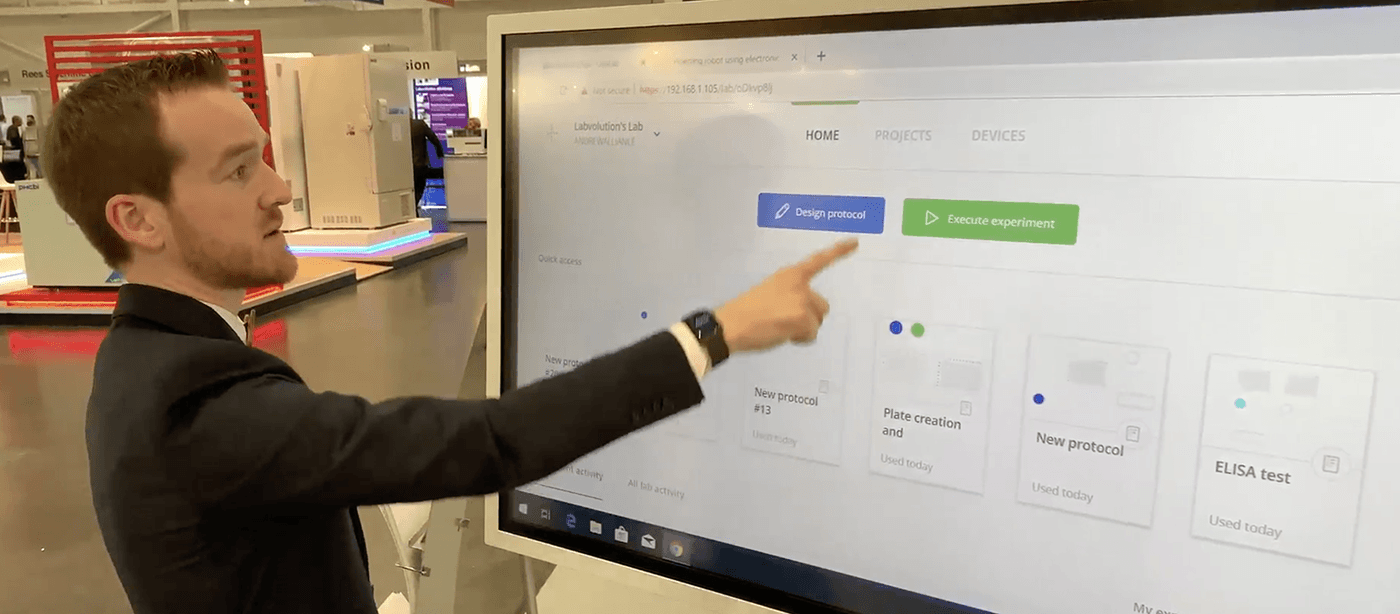

Andrew Alliance has developed a solution to this particular problem, and it is called OneLab. It is a unique browser-based software environment that enables researchers to design, execute and share their own protocols through a highly intuitive graphical interface. Protocols can then be executed step by step from any PC or tablet. Go to https://onelab.andrewalliance.com today and try out OneLab for free. It can be used with your current set-up and does not require any connected devices. Sharing protocols could not be easier.

OneLab combines some powerful advantages:

Designing experiment protocols is easy:

– Intuitive graphical drag-and-drop design interface.

– Eliminate error-prone calculations for serial dilutions and concentration normalizations.

– Accelerate collaboration and training by easily sharing protocols with other researchers.
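
To see why these calculations are worth automating, here is a minimal Python sketch of the arithmetic behind a serial dilution and a concentration normalization. It is an illustration only and not how OneLab itself works; the function names, parameters and example values are assumptions made up for this sketch.

```python
def serial_dilution(stock_conc, dilution_factor, steps, mix_volume_ul):
    """Plan a serial dilution (hypothetical helper, for illustration only).

    Each tube receives `transfer_ul` from the previous tube plus `diluent_ul`
    of diluent, giving `mix_volume_ul` in the tube before the next transfer.
    """
    if dilution_factor <= 1:
        raise ValueError("dilution_factor must be greater than 1")
    transfer_ul = mix_volume_ul / dilution_factor
    diluent_ul = mix_volume_ul - transfer_ul
    plan = []
    conc = stock_conc
    for step in range(1, steps + 1):
        conc = conc / dilution_factor  # concentration after this step
        plan.append({
            "step": step,
            "transfer_ul": transfer_ul,
            "diluent_ul": diluent_ul,
            "conc": conc,
        })
    return plan


def normalization_volumes(sample_conc, target_conc, final_volume_ul):
    """Solve C1 * V1 = C2 * V2 for V1; return (sample_ul, diluent_ul)."""
    if sample_conc <= 0 or target_conc <= 0:
        raise ValueError("concentrations must be positive")
    if target_conc > sample_conc:
        raise ValueError("a sample cannot be concentrated by dilution")
    sample_ul = target_conc * final_volume_ul / sample_conc
    return sample_ul, final_volume_ul - sample_ul


# Example: three 1:10 dilutions of a 100 (arbitrary units) stock, 1000 µL per tube
for row in serial_dilution(100.0, 10, 3, 1000.0):
    print(row)

# Example: bring a sample at 50 ng/µL down to 10 ng/µL in 100 µL
print(normalization_volumes(50.0, 10.0, 100.0))
```

Done by hand across dozens of samples, each with its own starting concentration, this is exactly the kind of step where a slipped decimal point goes unnoticed.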

Executing them is even easier:

– Ensure correct manual execution of protocols, with your current set-up.

– Guarantee reproducibility with secure communication of protocols to OneLab compatible device(s).

– Connectivity with the award-winning Andrew+ pipetting robot and Pipette+ smart pipetting system, or any other connected device, enables researchers to achieve the highest levels of repeatability and productivity, with the added advantage of full traceability.

Learn more at www.andrewalliance.com